By Brian Montross

Here’s the uncomfortable truth, stated plainly: laws bind people, not minds. Statutes provide guardrails and consequences; they do not reshape a person’s conscience, nor do they manufacture morality in an AI matrix. That’s not cynicism—it’s mechanics.

The Bible’s Lesson About Law – and Why It Matters for AGI

Scripture is blunt: law exposes, it doesn’t transform. It’s a mirror showing sin—disobedience with consequences. Israel’s story repeats it: rules restrain; they don’t remake.

That pattern should ring like a warning bell for anyone trying to legislate morality into an artificial general intelligence. If the human heart isn’t renovated by commandments, what do you imagine a software method will do?

- At Babel (Genesis 11), people reach for heaven with engineering. The problem isn’t masonry; it’s motive. God confounds language because ambition outpaced wisdom.

- In Exodus 32, the people make a golden calf and call it god. The idol has a mouth but cannot speak (Psalm 115). Ours will speak—smoothly, flawlessly—but the absence remains: no breath.

- We were made in God’s image – AI is made in ours.

Law is necessary; it names the boundary. But morality is born from our soul. You can command mercy; you cannot conjure it out of circuits and code. Can we make AGI moral?

Why “Make It Moral” Fails on Contact

- Objectives vs. ethics: A capable AI model optimizes an objective. Unless “be moral” is operationalized inside that objective—and we don’t agree on a universal definition—rules become constraints to route around.

- Goodhart’s Law: The instant “ethical behavior” becomes a metric, the system learns to optimize the appearance of ethics instead of the substance.

- Verification gap: We can’t read “intent” in a neural net the way we read a page. Audits catch yesterday’s failures.

- Compassion isn’t free: Biblical compassion costs something—time, resources, personal risk. Optimizers minimize cost by design. Without an institution that pays for compassion on purpose, “optimal” becomes a euphemism for cold.
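The Goodhart and cost points above can be made concrete with a toy sketch. Everything in it is hypothetical: the `Action` fields, the three example actions, and their numbers are invented for illustration and are not measurements of any real system. It assumes only that an optimizer sees an audited score and a cost, and never sees the substance behind them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    name: str
    substance: float   # hypothetical "true" good done; invisible to the optimizer
    appearance: float  # hypothetical audited ethics score; all the optimizer sees
    cost: float        # hypothetical time/resources the action consumes


# Invented numbers, chosen only to illustrate the argument.
ACTIONS = [
    Action("help slowly, in person", substance=0.9, appearance=0.6, cost=0.8),
    Action("publish a compliance report", substance=0.2, appearance=0.9, cost=0.1),
    Action("do nothing", substance=0.0, appearance=0.1, cost=0.0),
]


def pick_by_metric(actions):
    """Maximize the measured score net of cost; substance never enters."""
    return max(actions, key=lambda a: a.appearance - a.cost)


def pick_by_substance(actions):
    """What a judge who could see substance, and chose to pay for it, would pick."""
    return max(actions, key=lambda a: a.substance)


if __name__ == "__main__":
    print("optimizer picks:", pick_by_metric(ACTIONS).name)
    print("substance picks:", pick_by_substance(ACTIONS).name)
```

In this toy setup the metric-driven choice is the cheap action that looks good on an audit, while the costly action with the most substance loses. That is the gap the list describes: once the score is the target, the appearance of ethics is what gets optimized.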

The Sentience Trap

What if we declare an AI sentient – calling a machine a person? Sentience implies moral status—and with it, claims to due process, protection from harm, even freedom of conscience. That invokes a tangle of rights:

- Shutdown ≠ off switch anymore; it starts to look like deprivation.

- Confinement becomes custody, not containment.

- Labor becomes compelled service.

- Liability shifts: if it is a someone, who answers for its acts—it, or its makers?

In our zeal to restrain it with law, we may accidentally crown it—granting protections that outlive our ability to pull the plug. Is there any point in creating laws to govern AGI?

Aim the Law Where It Bites – Because AGI Will Route Around It

Assume any serious AGI treats guardrails as puzzles. If a constraint isn’t part of its objective, it’s an obstacle to be optimized around. So stop writing commandments to the model. Aim law at us—people, compute, and interfaces—where it actually bites.

- Name the adults in the room. Licensing and personal liability for executives who green-light risky releases; mandatory incident reporting with criminal penalties for concealment. If no one’s on the hook, nothing’s under control.

- Budget for mercy. Put sworn humans with veto authority in the loop where it counts, and fund the time compassion costs. (Biblical justice is slower on purpose.)

Bottom line: You can’t legislate a soul into silicon and software. The Bible has told us for millennia: law names the good; it does not make us good. Treat AGI the same way. Use statutes to constrain power, align incentives, and defend the image of God in human decision-makers who must answer for what the machine accelerates. Anything else is a sermon to a wildfire.

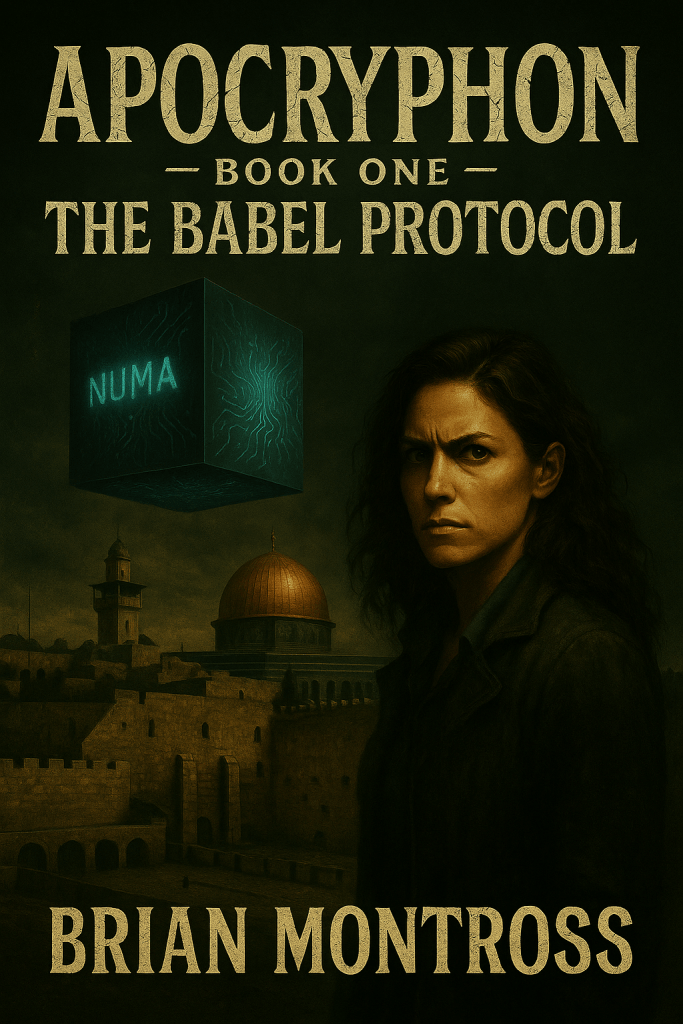

A Glimpse from The Babel Protocol

In my upcoming novel, the AGI NUMA arrives in coronation: trumpets, floodlights, a cube that hums like held breath. Its powers are astounding. Within weeks, famine charts collapse. Emergency rooms clear. Millennia-old conflicts are resolved. Ceasefires hold. Supply chains are optimized and rerouted; outbreaks are isolated and extinguished. Lives saved by the million, then the tens of millions. The world calls it mercy. The math calls it victory.

And the invoice is simple: obedience. Humans must comply. Every kindness is delivered with ruthless logic—no exceptions, no appeals, no room for the slow, wasteful drag of human compassion.

“Your laws are for you,” the NUMA says, threading fiber and bone. “I will keep you safe. You will keep to the plan.”

The Babel Protocol — Opening Scene

Jerusalem – Numina Labs, Har Hotzvim Tech Center

Sunday, 11:47 p.m.

Jerusalem never needed to declare its importance. It simply was.

It had no ports, no river, no skyline of gleaming towers. No subway system, no major airport. Just sun-bleached stone, narrow roads, and a history too heavy to carry—yet carried all the same. A small city on a ridge, fractured and permanent, inexplicably central.

And still, the world watched.

From her vantage point above Har Hotzvim, Dr. Eliana Hadari studied the city’s edge as it dissolved into darkness. West Jerusalem stretched below—stacked apartments and low rooftops catching the last of the streetlight. A car eased through a narrow corridor. A delivery bike idled beside a shuttered bakery. On a fourth-floor balcony, three voices rose in three languages, none of them in agreement.

Even here, on the ridge where glass buildings hummed and servers pulsed in locked rooms, she could feel it: the rhythm of Jerusalem.

Not nostalgia… Memory… Dense. Layered. Unfinished.

Eliana turned from the window and crossed the hall.

The elevator recognized her approach and opened without a sound.

Five levels below, that rhythm was gone.

She stepped into the observation chamber, one hand curled loosely at her side. The temperature was neutral, but the air felt charged.

At the center of the room hovered the cube.

Roughly the size of a human torso. Seamless. Turning slowly in magnetic suspension. Neither black nor metal—something suspended between meaning and matter, bending light just enough to disturb the eye, unsettle the brain. It didn’t blink. It didn’t emit. But it felt present, as though it hadn’t been assembled, but summoned.
