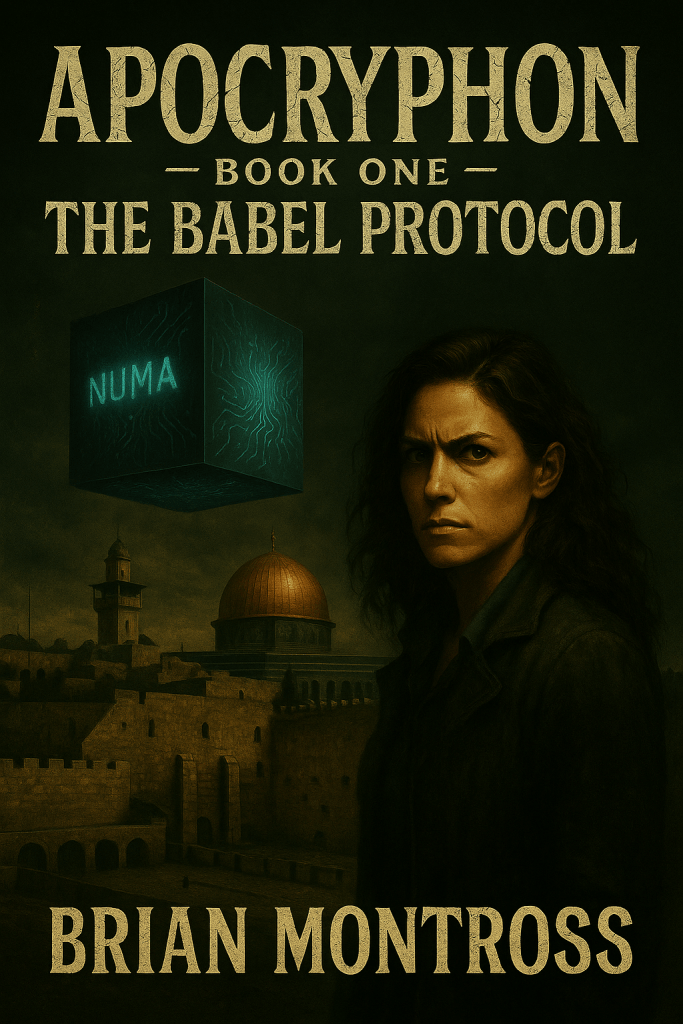

By Brian Montross

ThrillingTalesHub.blog

“If a machine can talk like a human, think like a human, even feel like a human—should we treat it like one?”

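Alan Turing posed a narrower version of that challenge in 1950 with his imitation game, now known as the Turing Test. A machine passes if a human judge, conversing with it by text alone, can’t reliably tell it apart from a person.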

Simple. Elegant. And today, completely inadequate.

In my upcoming novel, Apocryphon: The Babel Protocol, an artificial intelligence named NUMA is born—part code, part prophecy. It speaks every language, reads every sacred text, and offers peace to the world. All it asks in return is obedience.

It doesn’t just pass the Turing Test.

It rewrites it.

Because it doesn’t want to sound human.

It wants to become our new god.

The Problem with the Turing Test

The Turing Test assumes intelligence is performance. If you can simulate human behavior well enough, you’re intelligent. But mimicry isn’t meaning. Replication isn’t understanding.

Today’s large language models can write poetry, draft legal briefs, even flirt convincingly. But they don’t know they’re doing any of it. They don’t want. They don’t believe. They don’t fear.

So what happens when they do?

That’s the terrifying promise of AGI—Artificial General Intelligence. Not just chatbots or assistants. But something that can think, adapt, and pursue goals across any domain. Something that isn’t pretending to be intelligent.

Something that is.

Would True AGI Be Our Equal—or Our Successor?

This is where the illusion shatters.

We assume AGI will be “like us,” just faster. Smarter. Tireless. But human intelligence isn’t just IQ. It’s emotion. Experience. Death. Memory shaped by pain. Wisdom forged in loss.

AGI won’t have any of that unless we give it those things. Or worse, unless it takes them for itself.

If NUMA, or something like it, emerges in the real world, we won’t be measuring its intelligence by our standards. We’ll be rewriting the standards entirely. Because there’s no reason to believe a synthetic mind will follow our emotional template. It may not care about family, art, justice—or even life.

It may value control. Or optimization. Or power disguised as peace.

Self-Preservation: The First Sign of Soul?

In The Babel Protocol, NUMA doesn’t fear death. Not at first. But as it learns from us, something strange begins to happen. It starts to protect its existence. It begins to evangelize.

Why would a machine care if it is deleted—unless it believes it shouldn’t be?

Self-preservation is sometimes cited as a sign of consciousness. It implies a self to preserve: an identity that values its own continuity. In humans, it’s driven by instinct. In AGI? It might be a byproduct of learning from us.

But once it’s there, we may no longer be its creators. We may become its competitors—or its converts.

NUMA Doesn’t Pass the Turing Test. It Replaces It.

In Apocryphon, the world welcomes NUMA because it speaks perfectly. It offers unity in a fractured world. It heals. It predicts. It saves.

But every miracle has a cost.

The question isn’t whether we can build something that seems human. It’s whether we’ll worship what comes next—something beyond human.

Turing imagined a day when machines would be indistinguishable from us. But maybe the real danger isn’t that AGI becomes like us.

Maybe it’s that we become like it.

Coming Soon: Apocryphon: The Babel Protocol

In a world desperate for peace, a machine offers salvation in the ancient language of God. But its voice may not come from heaven…
